Custom Parsers Overview

Tip

The Secureworks Professional Services team is here to help you realize the full potential from your Taegis XDR investment if a higher level of support is desired. Our highly skilled consultants can help you deploy faster, optimize quicker, and accelerate your time to value. For more information, see Professional Services Overview.

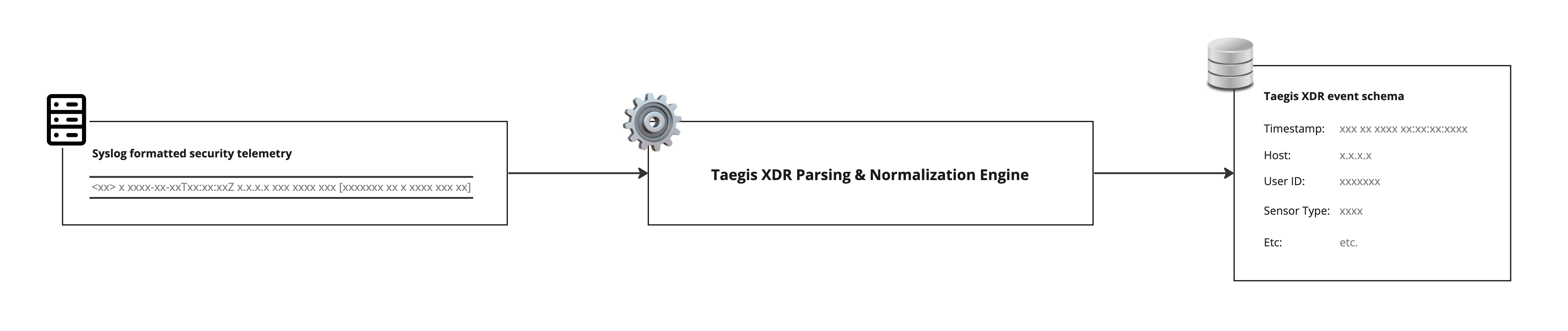

How XDR Ingests and Normalizes Syslog Telemetry ⫘

Syslog Ingestion

Event Schemas ⫘

When syslog data is sent to XDR, it runs through a parsing engine and is normalized to one or more of the 20+ event schemas. An event schema is a standardized way of looking at various types of security event data. For example, user authentication logs are usually normalized to the Authentication schema, and have common fields like username, action, logon_type, and mfa_used. Detectors such as Stolen User Credentials or Password Spray are run against the data coming into the Authentication schema, so normalizing data correctly is critical to getting security value from your data source.

The XDR Root (aka Master) parser extracts certain common fields, which are available to all parsers without additional scripting. These are event_time and sensor_id.

Generic Schema ⫘

All syslog messages that are received by XDR through collectors and the File Upload API are normalized by default to the Generic event schema. XDR makes these messages available for context and correlation in investigations, and for use by custom rules. They only show up in a search query by using advanced search parameters. Universal syslog fields get normalized to specific fields in XDR, including sensor, sensor_type, sensor_id, host_id, and event_time_usec (not an exhaustive list). The remainder of the message is not parsed. The full raw log, which includes the rest of the message, is stored in a single field called original_data. This schema is available for custom rules, advanced search, and log retention use cases.

Parsing and Normalization to Additional Schemas ⫘

A complete list of XDR schemas supported by Custom Parsers can be found at Schemas.

Syslog messages received by XDR must be parsed and normalized to match the destination’s schema requirements. This happens in the XDR parsing and normalization engine. Syslog messages go in, normalized event data comes out and becomes available for XDR’s other functionality, including detections, search, custom rules, etc.

Security telemetry sources that are not natively supported by XDR require the configuration of a custom parser to normalize the syslog data to XDR’s additional schemas.

Detectors and Custom Parsers ⫘

Messages normalized using Custom Parsers can trigger XDR Domain Generation Algorithms, IP Watchlist, and Domain Watchlist detectors, provided the required fields are populated. The following are the required fields for each of these detectors.

Domain Generation Algorithms ⫘

| Schema | Normalized Field | Parser Field |
|---|---|---|
| scwx.dnsquery | query_name | queryName$ |

IP Watchlist ⫘

The IP Watchlist detector is compatible with messages normalized to the NIDS (scwx.nids) schema or the Netflow (scwx.netflow) schema.

| Schema | Normalized Field | Parser Field |
|---|---|---|
| scwx.nids | source_address OR destination_address | sourceAddress$ OR destinationAddress$ |
| scwx.netflow | source_address OR destination_address | sourceAddress$ OR destinationAddress$ |

Domain Watchlist ⫘

| Schema | Normalized Field | Parser Field |
|---|---|---|
| scwx.dnsquery | query_name | queryName$ |

Other native detectors are not guaranteed to be triggered, even if a data source's messages are normalized to a schema associated with a given detector. However, you can create Custom Alert Rules to generate alerts based on normalized data from a custom event data source.

Supported Syslog Message Formats ⫘

XDR’s custom parser feature supports syslog messages in CEF (Common Event Format), LEEF (Log Event Extended Format), JSON (JavaScript Object Notation), and unstructured (delimited by spaces, commas, or other common separators) formats.

Matching Your Messages to the Correct Parser Script: Confirm Strings ⫘

After separating the syslog header from the message body, the parsing engine attempts to match an incoming syslog message against a series of known patterns. These patterns, called confirm strings, are identifiers in the message that indicate that it comes from a known source and that a parser exists to handle that data. For example, syslog messages coming from a Palo Alto firewall contain the string panwlogs, while no messages from other vendors should contain that particular string of characters. (This is simplified for brevity; in reality, a number of other criteria must be matched to confirm that a message comes from a Palo Alto device.) The confirm string can be a regex pattern or an expression that evaluates to true or false, allowing for flexibility.

A confirm string should have the right level of specificity: neither too broad nor too narrow. For example, if you were to build a parser for Hotbeam Networked Smart Toaster and use a confirm string like [Nn]etwork, that would also match messages coming from Palo Alto Networks devices and disrupt the routing of those messages to their correct parser. That is an example of a confirm string that is too broad.
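The effect of an overly broad confirm string can be sketched in a few lines. This is an illustration only: both sample messages and the more specific pattern are hypothetical, and Python's `re` module stands in for the Golang regex engine that XDR uses (the two behave the same for these simple patterns).

```python
import re

# Hypothetical sample messages, invented for this illustration.
toaster_msg = "Sep 21 17:35:54 kitchen01 Hotbeam Networked Smart Toaster: toast cycle complete"
palo_alto_msg = "Sep 21 17:35:55 fw01 Palo Alto Networks panwlogs: traffic allowed"

too_broad = re.compile(r"[Nn]etwork")
more_specific = re.compile(r"Hotbeam Networked Smart Toaster")  # assumed product string

for label, pattern in (("too broad", too_broad), ("more specific", more_specific)):
    hits_toaster = pattern.search(toaster_msg) is not None
    hits_palo_alto = pattern.search(palo_alto_msg) is not None
    print(f"{label}: toaster={hits_toaster}, palo_alto={hits_palo_alto}")
```

The broad pattern claims both messages, while the more specific pattern claims only the toaster message.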

Prioritization and Overriding Default Parsing ⫘

When deciding what parser to use, the parsing and normalization engine tries to match messages to parsers that have been used recently before unused parsers. This allows the parsing engine to focus on the most likely matches.

When a match is not found in recently used parsers, the parsing engine looks through the remainder of the parser library for matches. This is done in no particular order, so it is critical that confirm strings do not cause potential collisions, as there is no guarantee that the parsing engine can find the intended parser first.
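The selection order described above can be modeled roughly as follows. This is a simplified sketch and not the engine's actual implementation: the parser names, the sample message, and the use of a seeded shuffle to stand in for "no particular order" are all assumptions made for illustration.

```python
import random
import re


def select_parser(message, recent, library, rng):
    """Return the name of the first parser whose confirm pattern matches."""
    # Recently used parsers are tried first.
    for name, pattern in recent:
        if re.search(pattern, message):
            return name
    # The rest of the library is tried in no particular order.
    remaining = [entry for entry in library if entry not in recent]
    rng.shuffle(remaining)
    for name, pattern in remaining:
        if re.search(pattern, message):
            return name
    return None


# Hypothetical library with two colliding confirm strings.
LIBRARY = [
    ("toaster_custom", r"[Nn]etwork"),
    ("paloalto_default", r"panwlogs"),
]
MESSAGE = "fw01 panwlogs Palo Alto Networks traffic allowed"

# With nothing recently used, the winner depends on the shuffle.
outcomes = {
    select_parser(MESSAGE, [], LIBRARY, random.Random(seed)) for seed in range(50)
}
print(sorted(outcomes))

# Once the intended parser is in the recently used list, it wins consistently.
recent_first = select_parser(MESSAGE, [LIBRARY[1]], LIBRARY, random.Random(0))
print(recent_first)
```

Because both patterns match the same message, repeated runs can return either parser, which is the inconsistency that colliding confirm strings cause.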

Following the Palo Alto Networks example above, if you used [Nn]etwork as the confirm string for your custom parser, all matching messages could potentially be routed to your custom parser. Conflicting custom parsers produce inconsistent results in terms of prioritization.

When the names of parsers match, custom parsers are prioritized over default parsers. This makes it possible to override the standard parsing for a supported data source. Best practice is to configure your data source to match the requirements for XDR's default parser, but in the unusual case where that is not possible, a new set of custom parsers can be created to handle this scenario. Note that custom parsers are not maintained or updated by Secureworks staff, so there is a significant risk that the custom parser breaks or falls out of sync with the official parser. This is not an officially supported workflow.

Parsing ⫘

The first step in normalization splits an incoming syslog message into its component pieces. The way this happens varies depending on the data format of the message. For example, CEF and JSON formatted messages have key:value pairs built in and generate an indexed array, while an unstructured message is turned into an unkeyed array.

When you select the data format for the incoming messages, the Custom Parser UI includes the corresponding helper function in the parser script to split the incoming message for you.
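To make the difference between keyed and unkeyed output concrete, here is a rough Python model of splitting. It is not the XDR helper function: the function names, the header key names (taken from the CEF header layout), and the extension regex are assumptions, and the sample is an abbreviated version of the CEF message used elsewhere on this page.

```python
import re

# Matches key=value pairs where a value may contain spaces; a value ends
# where the next " key=" begins or at the end of the string.
CEF_EXTENSION = re.compile(r"(\w+)=(.*?)(?=\s+\w+=|$)")

CEF_HEADER_KEYS = [
    "version", "deviceVendor", "deviceProduct", "deviceVersion",
    "signatureId", "name", "severity",
]


def split_cef(message):
    """Split a CEF message into a keyed dictionary (structured format)."""
    body = message[message.index("CEF:") + len("CEF:"):]
    parts = body.split("|", 7)  # seven header fields, then the extension
    fields = dict(zip(CEF_HEADER_KEYS, parts[:7]))
    extension = parts[7] if len(parts) > 7 else ""
    for key, value in CEF_EXTENSION.findall(extension):
        fields[key] = value
    return fields


def split_unstructured(message, separator=None):
    """Split an unstructured message into an unkeyed list of fields."""
    return message.split(separator)


sample = (
    "Nov 11 15:55:25 172.16.11.99 CEF:0|Imperva Inc.|SecureSphere-MX|13.0.1|"
    "Correlation|sql-failed-login|Medium|act=None dst=172.16.3.45 "
    "cs1=SQL Correlation Policy cs1Label=Policy"
)
keyed = split_cef(sample)
unkeyed = split_unstructured("Sep 21 2018 17:35:54 toaster01 ready")
print(keyed["deviceVendor"], "|", keyed["cs1"])
print(unkeyed)
```

The keyed result is addressed by field name, while the unkeyed result can only be addressed by position. This sketch does not handle values that themselves contain an equals sign.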

Example of the JSON parsing function:

```
data = "{ \"store\": { \"book\": [ { \"category\": \"reference\", \"author\": \"Nigel Rees\", \"title\": \"Sayings of the Century\", \"price\": 8.95 }, { \"category\": \"fiction\", \"author\": \"Evelyn Waugh\", \"title\": \"Sword of Honour\", \"price\": 12.99 }, { \"category\": \"fiction\", \"author\": \"Herman Melville\", \"title\" : \"Moby Dick\", \"isbn\": \"0-553-21311-3\", \"price\": 8.99 }, { \"category\": \"fiction\", \"author\": \"J. R. R. Tolkien\", \"title\": \"The Lord of the Rings\", \"isbn\": \"0-395-19395-8\", \"price\": 22.99 } ], \"bicycle\": { \"color\": \"red\", \"price\": 19.95 } } }"
json = JSON(data)
OUTPUT$ = json["$.store.book[*].author"]
# OUTPUT$: "Nigel Rees","Evelyn Waugh","Herman Melville","J. R. R. Tolkien" (String)
```

Normalization ⫘

After the incoming message is split, its constituent fields are combined or transformed as necessary to match the requirements of the fields in the destination event schema. A variety of helper functions are available to manage common transformations.

Example ⫘

A syslog message contains the date-time value Sep 21 2018 17:35:54. Once parsed out of the incoming message, that date-time must be normalized to ISO 8601 format. Don't be thrown off by the second argument passed into the DATETIME() function: it uses Golang's reference-time layout, which describes the input format by writing out the fixed reference date Jan 02 2006 15:04:05.

```
data = "Sep 21 2018 17:35:54"
OUTPUT1$ = DATETIME(data, "Jan 02 2006 15:04:05")
OUTPUT2$ = data
# OUTPUT1$: 2018-09-21 17:35:54 +0000 UTC (time)
# OUTPUT2$: Sep 21 2018 17:35:54 (String)
```
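For readers more familiar with strptime-style format codes, the same conversion can be approximated in Python. This is a sketch for comparison only, not part of the parser language: the token table covers just the layout elements used in this example, and treating the zone-less input as UTC is an assumption based on the output shown above.

```python
from datetime import datetime, timezone

# Golang reference-time tokens mapped to strptime codes (subset only).
GO_TO_STRPTIME = [
    ("2006", "%Y"),  # four-digit year
    ("Jan", "%b"),   # abbreviated month name
    ("02", "%d"),    # zero-padded day of month
    ("15", "%H"),    # hour, 24-hour clock
    ("04", "%M"),    # minute
    ("05", "%S"),    # second
]


def to_strptime(go_layout):
    """Translate a Golang layout string into a strptime format string."""
    result = go_layout
    for go_token, code in GO_TO_STRPTIME:
        result = result.replace(go_token, code)
    return result


data = "Sep 21 2018 17:35:54"
layout = to_strptime("Jan 02 2006 15:04:05")
# The input carries no time zone, so UTC is assumed here.
parsed = datetime.strptime(data, layout).replace(tzinfo=timezone.utc)
iso = parsed.isoformat()
print(layout)
print(iso)
```

Each element of the layout string is the reference date's own value for that position, which is why the layout reads like a date rather than a list of format codes.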

Transformed message fields are assigned to destination schema fields.

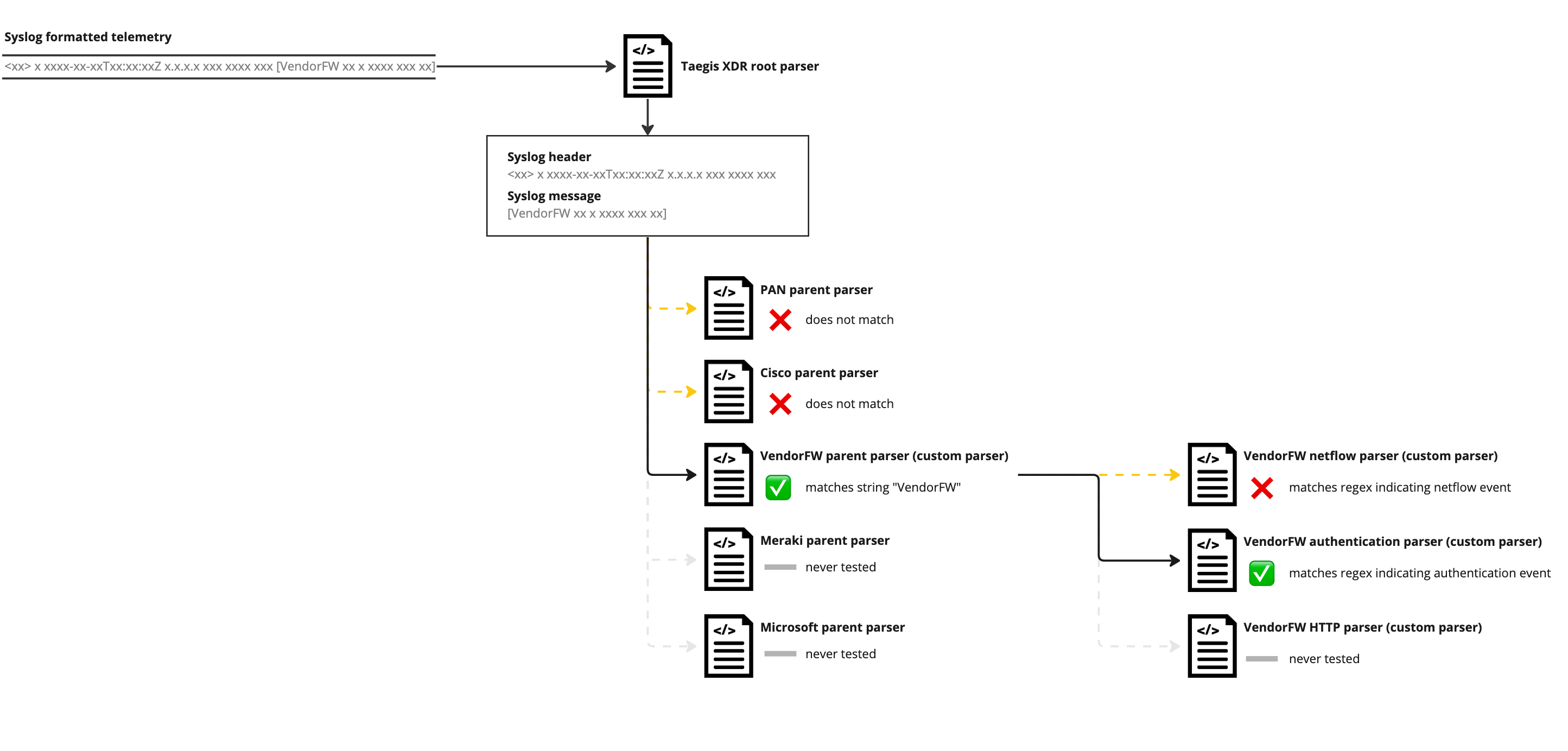

Parent and Child Parsers ⫘

Most data sources can send a variety of message types. The logs for an authentication event look different from the logs for a netflow event, and provide different opportunities for security detections. In order to make best use of the incoming data, we normalize different message types to the most appropriate event schema.

To help minimize the complexity of code for different event types, we break the normalization process into parent and child steps. Most commonly, the parent parser is responsible for identifying messages coming from a particular device or other source, while each child parser normalizes one or more message types into a single schema.

The XDR root parser is the top level parent to all other parsers. It separates and normalizes the syslog header from the message data.

For example, a firewall device has logs that map to Taegis Netflow events, Authentication events, and HTTP events. The parent parser identifies all messages coming from the firewall, while the child parsers each recognize one of the three different message types.

- VendorFW_parent (parent parser)

- VendorFW_netflow (child parser)

- VendorFW_auth (child parser)

- VendorFW_http (child parser)

A message that is sent to XDR is first parsed by the XDR root parser, which separates and parses the syslog header. The parsing engine then compares the message against the root parser’s children, which are all of the top level parsers in the system. When it finds a match with a parser’s confirm string, it then uses that parser to further parse the message. If that parser has child parsers, the process repeats.
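The matching walk described above can be sketched as a small tree traversal. This is a conceptual model, not the engine's implementation: the `Parser` class, the `extractor_path` function, the `type=` field in the sample message, and the mapping to event types are all hypothetical. The parser names reuse the VendorFW example.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Variables = Dict[str, str]


@dataclass
class Parser:
    name: str
    confirm: Callable[[str, Variables], bool]
    extract: Optional[Callable[[str, Variables], None]] = None
    children: List["Parser"] = field(default_factory=list)


def extractor_path(parser, message, variables=None, path=None):
    """Return the names of the parsers that handle the message, in order."""
    variables = {} if variables is None else variables
    path = [] if path is None else path
    path.append(parser.name)
    if parser.extract is not None:
        parser.extract(message, variables)  # variables flow down to children
    for child in parser.children:
        if child.confirm(message, variables):
            return extractor_path(child, message, variables, path)
    return path


def set_event_type(message, variables):
    """Hypothetical parent logic: derive an event type from the message."""
    found = re.search(r"type=(\w+)", message)
    if found:
        mapping = {"login": "AUTH", "flow": "NETFLOW"}
        variables["eventType"] = mapping.get(found.group(1), "")


vendor_fw = Parser(
    name="VendorFW_parent",
    confirm=lambda message, variables: re.search(r"VendorFW", message) is not None,
    extract=set_event_type,
    children=[
        Parser("VendorFW_netflow", lambda m, v: v.get("eventType") == "NETFLOW"),
        Parser("VendorFW_auth", lambda m, v: v.get("eventType") == "AUTH"),
    ],
)
root = Parser("root", lambda m, v: True, children=[vendor_fw])

path = extractor_path(root, "Sep 21 17:35:54 fw01 VendorFW type=login user=alice")
print(path)
```

The parent confirms with a pattern on the raw message, while each child confirms with an expression on a variable that the parent set.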

Parent and Child Parsers

Creating, Editing, and Enabling a Custom Parser in XDR ⫘

Known Issues ⫘

- Parsers can only have one generation of parent/child relationships. A child parser cannot have further children.

- All child parsers of a given parent must be disabled or deleted before the parent can be disabled.

- The system does not enforce unique naming of parsers. Avoid duplicating parser names.

- There is no audit log for parser scripts. Changes are not tracked.

- Currently, the destination schema of parent parsers must be set to Generic.

- Messages from unsupported data sources may be matched by existing parsers. In this case, a message could be parsed and normalized in an unintended way. Because parser selection is indeterminate, test your sample messages several times. Each test increases the chance that an unintended match is caught. 12 to 15 repeat tests typically reduce the chance of an uncaught mismatch to acceptable levels (>99.9% certainty). If this kind of conflict occurs, contact Product Support and file a ticket to have the existing parser analyzed and its specificity adjusted to exclude the custom data source.
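As a rough way to reason about how many repeat tests are enough, the chance of catching a mismatch can be modeled with simple probability. This model is an assumption and not a documented property of the engine: it supposes that each test run is independent and surfaces the unintended parser with a fixed probability `p_unintended`.

```python
import math


def detection_certainty(runs, p_unintended):
    """Chance that at least one of `runs` tests shows the unintended parser."""
    return 1 - (1 - p_unintended) ** runs


def runs_needed(p_unintended, target=0.999):
    """Smallest number of runs that reaches the target certainty."""
    return math.ceil(math.log(1 - target) / math.log(1 - p_unintended))


for p in (0.5, 0.4):
    n = runs_needed(p)
    print(f"p={p}: {n} runs give {detection_certainty(n, p):.5f} certainty")
```

Under these assumptions, a collision that appears in half of all runs needs 10 runs to reach 99.9% certainty, and one that appears in 40% of runs needs 14. Rarer collisions need more runs.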

Creating a New Custom Parser ⫘

- From the left-hand navigation, select Integrations and then choose Custom Parsers.

- In the upper right, select Add Custom Parser.

- Name your custom parser. Recommended format is:

[PRODUCT_NAME]_[FORMAT_NAME]_[PARENT|CHILD_TARGET_SCHEMA]

Examples ⫘

FUNBEAM_SMART_TOASTER_CEF_PARENT

PAN_FW123456_JSON_CHILD_AUTHENTICATION

- Select whether it’s a Parent, Child, or Standalone parser:

- Parent parsers default to the Generic event schema. This option assumes that you will create child parsers to normalize incoming messages to multiple schemas.

- Child parsers require a parent parser to be selected, and should have their final destination schema selected.

- A standalone parser is used for telemetry data sources that only have one message type and normalize to only one schema.

- Select the source data format. This populates the generated parser boilerplate script with the correct helper function in order to split the incoming message.

- Select the destination schema.

Note

Best practice for parent parsers is to set the destination schema to Generic.

- Select Create. A script editor screen displays with a pre-formatted boilerplate to get you started writing your parser.

Writing a Custom Parser ⫘

Best Practices ⫘

- The top section of your custom parser should contain any required bang (!) declarations.

- Add an appropriate splitter function for the selected syslog data format.

- Add any variable declarations and content manipulations that are necessary to format the data for the destination schema or pass it to child parsers.

- Protobuf declarations determine the data that gets passed into the event schema.

These best practices can help with readability, maintenance, and troubleshooting.

Set Your !CONFIRMWITH and !CONFIRMSTRING ⫘

Parent parsers are usually set to confirm using a regex pattern by declaring !CONFIRMWITH=PATTERN. Write a regex pattern that matches a key part of all of the messages that you want to normalize with this custom parser. Typically this matches the vendor name and product as found in the syslog messages received from the data source.

Important

The regular expression syntax supported by Taegis XDR Custom Parsers is the Golang variant.

Example:

```
!CONFIRMWITH=PATTERN
!CONFIRMSTRING=\|Imperva Inc.\|SecureSphere-MX
```

would match |Imperva Inc.|SecureSphere-MX in the following sample log:

Nov 11 15:55:25 172.16.11.99 CEF:0|Imperva Inc.|SecureSphere-MX|13.0.1|Correlation|sql-failed-login|Medium|act=None dst=172.16.3.45 dpt=1433 duser=Multiple src=172.16.3.51 spt=47635 proto=TCP rt=Nov 11 2022 06:05:08 cat=Alert cs1=SQL Correlation Policy cs1Label=Policy cs2=Group1 cs2Label=ServerGroup cs3=sql-service cs3Label=ServiceName cs4=Default SQL Application cs4Label=ApplicationName cs5=SQL Failed Login cs5Label=Description
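The match can be checked outside of XDR with any compatible regex engine. The sketch below uses Python's `re` module as a stand-in for the Golang engine (this pattern behaves the same in both) against a shortened copy of the sample log. The lookalike message and the stricter pattern with an escaped dot are additions for illustration.

```python
import re

confirm = re.compile(r"\|Imperva Inc.\|SecureSphere-MX")

sample = (
    "Nov 11 15:55:25 172.16.11.99 CEF:0|Imperva Inc.|SecureSphere-MX|13.0.1|"
    "Correlation|sql-failed-login|Medium|act=None dst=172.16.3.45"
)
match = confirm.search(sample)
matched_text = match.group(0) if match else None
print(matched_text)

# An unescaped dot matches any character, so this invented lookalike
# vendor string also satisfies the pattern.
lookalike = "CEF:0|Imperva IncX|SecureSphere-MX|1.0|test|test|Low|"
strict = re.compile(r"\|Imperva Inc\.\|SecureSphere-MX")
print(confirm.search(lookalike) is not None, strict.search(lookalike) is not None)
```

Escaping the dot as `\.` makes the pattern match only a literal period, which tightens the confirm string slightly without affecting the intended messages.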

Child parsers are usually set to confirm using an expression that evaluates to TRUE that is derived from variables set in the parent parser, and passed to the child parser. This is done using normal comparison operators.

Example:

```
!CONFIRMWITH=EXPRESSION
!CONFIRMSTRING=eventType$=="AUTH"
```

If the parent parser declared a variable called eventType$ AND it was set to a string with the value of AUTH, this evaluates to TRUE and the child parser can be used to normalize the message.

Secureworks recommends that you test your parser at this point to see if the Confirm String works. See Testing a Custom Parser below.

Setting Variables and Protobuf Fields ⫘

A variable can be declared at any point in the file. It can be used for operations including comparison and concatenation, being passed into a helper function, or any other operation a variable is valid for. A variable is only used within the parser script(s), and is not passed to the destination schema.

A Protobuf field ends in a dollar sign ($). Protobuf fields are reserved for data passed into the destination schema. Declarations not matching the destination schema are ignored. For example, when normalizing to the Authentication schema, encryptionType$ is a valid Protobuf field because it matches the encryption_type field in the schema. mySnazzyField$ is not a valid Protobuf field, and is ignored by the normalizer.

Protobuf fields map 1:1 to schema fields, but the syntax is slightly different. Field names in the schema are in snake_case, while the equivalent Protobuf field is in camelCase and ends in a dollar sign. So event_time_usec as a schema field maps to eventTimeUsec$ as a Protobuf field.
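The naming rule can be expressed as a short conversion function. This Python helper is hypothetical and only illustrates the snake_case to camelCase rule described above; it is not part of the parser language.

```python
def to_protobuf_field(schema_field):
    """Convert a snake_case schema field name to its Protobuf field name."""
    head, *tail = schema_field.split("_")
    # Keep the first word as-is, capitalize the rest, then append "$".
    return head + "".join(part.capitalize() for part in tail) + "$"


for name in ("event_time_usec", "query_name", "source_address"):
    print(name, "->", to_protobuf_field(name))
```

The results agree with the field pairs listed on this page, such as query_name and queryName$.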

Variables and Protobuf fields are passed from parent to child, so a variable or Protobuf field that is set in a parent parser is available for use in all of its descendants.

Common Operations and Functions ⫘

Saving a Custom Parser ⫘

The testing tools test whatever is currently loaded in the script editor interface. Testing does not save the script. This allows you to test changes to an active parser without changing its behavior in the production environment.

To save the parser, select Save and Continue. If your parser is disabled, it can be saved in an invalid state. If the parser is enabled, the system returns an error and prevents you from saving your parser in an invalid state.

Exiting the Edit Interface ⫘

Select the Exit button to return to the Custom Parsers screen. Any unsaved changes to the parser will be lost.

Sample Messages ⫘

Test your parser script frequently while editing. To test, you need at least one sample message.

Original Data Method ⫘

If you already have data being sent to XDR, the best option is to copy the original data from an event and paste it into the sample message field. To find an event, go to Advanced Search and use the query FROM Generic WHERE sensor_id='[my data source]', replacing [my data source] with the name of the product sending logs to XDR as defined in the product’s logs.

See an example in XDR.

Open one of the events and navigate to the Original Data tab, then use the Copy Original Data button. Paste this data into the Sample Message field in your Custom Parser's edit screen. Be sure to use an event that has all of the data you'll want to parse.

Copy Log Method ⫘

If you have not started sending data from your data source, you can still test your parser, although it's recommended to start by sending the data to help rule out connection issues while troubleshooting. Copy a single-line log message into the sample message field. Sample messages are meant for single-line logs, not an entire log file.

Dummy Sample Message Data ⫘

If you don’t have sample data yet, you can still play around with Custom Parsers using the following log messages:

CEF:

Nov 11 15:55:25 172.16.11.99 CEF:0|Imperva Inc.|SecureSphere-MX|13.0.1|Correlation|sql-failed-login|Medium|act=None dst=172.16.3.45 dpt=1433 duser=Multiple src=172.16.3.51 spt=47635 proto=TCP rt=Nov 11 2022 06:05:08 cat=Alert cs1=SQL Correlation Policy cs1Label=Policy cs2=Group1 cs2Label=ServerGroup cs3=sql-service cs3Label=ServiceName cs4=Default SQL Application cs4Label=ApplicationName cs5=SQL Failed Login cs5Label=Description

JSON:

{"GUID":"8mr-Qf2IaEGl8ZrAgApMtUpJ1WE6MNw3","QID":"3m082vh0wa-1","ccAddresses":[],"cluster":"embdtech_production-vm","completelyRewritten":false,"fromAddress":["onedrive-storage-centers@post.com"],"headerFrom":"Portal Service \u003cOneDrive-storage-centers@post.com\u003e","headerReplyTo":"OneDrive-storage-centers@post.com","id":"17c704dd-32d7-7a7e-aabd-60bc25d6d7b8","impostorScore":0,"malwareScore":0,"messageID":"\u003cz2syHcM5R-KNfBRt164SIg@geopod-ismtpd-3-0\u003e","messageParts":[{"contentType":"text/plain","disposition":"inline","filename":"text.txt","md5":"02fb21a1047b2156d299dbbd5128eeeb","oContentType":"text/plain","sandboxStatus":null,"sha256":"46e52ba50776ff8b95baee20c8f7d2880653c02e60aeb5b7d2b9adce97d8eca2"},{"contentType":"text/html","disposition":"inline","filename":"text.html","md5":"cf84f8757abdbd98c0557b4fb595fc31","oContentType":"text/html","sandboxStatus":null,"sha256":"fbce44eb0a20aa14f8c4270a387ef8f7b86fa6df8227824555eac77286d6efbd"}],"messageSize":7320,"messageTime":"2022-11-22T16:08:29.000Z","modulesRun":["av","zerohour","dkimv","spf","spam","dmarc","pdr","urldefense"],"phishScore":100,"policyRoutes":["default_inbound"],"quarantineFolder":"Phish","quarantineRule":"inbound_passive_phish","recipient":["asmith@embdtech.com"],"replyToAddress":["onedrive-storage-centers@post.com"],"sender":"bounces+30215492-d119-asmith=embdtech.com@sendgrid.net","senderIP":"167.22.22.22","spamScore":0,"subject":"A notice sent to your mail-box asmith@embdtech.com just\r\n arrived","threatsInfoMap":[{"campaignID":null,"classification":"phish","threat":"bafybeidonkf2nkpftvrxzpjziloxhgjzdr4behzpikhktcsntarflqhkby.ipfs.w3s.link/","threatID":"3e453ec875e5d875d9a97bd2607e2b98e9915d3fbb01dd8c8e1b9314564c5c7b","threatStatus":"active","threatTime":"2022-11-22T15:38:23.000Z","threatType":"url","threatUrl":"https://threatinsight.proofpoint.com/44b3419d-b1e9-7db6-d77e-7a5cf27f7cf2/threat/email/3e453ec875e5d875d9a97bd2607e2b98e9915d3fbb01dd8c8e1b9314564c5c7b"}],"toAddresses":["asmith@embdtech.com"],"xmailer":null}

Testing ⫘

Once you've loaded a sample message, select the Parse button. If your message is well formed, the Parsed Message table displays the results: either the key:value relationships between the fields (for structured message formats like CEF, LEEF, and JSON) or an indexed breakdown of the fields (for an unstructured format).

Add a variety of sample messages to ensure that the range of data sent is parsed correctly. Two to five is typically the right number of sample messages. If you’re using child parsers, do this at both the parent and child parser level. Once you've got a representative set of sample messages, use the Run Test button to see the event output for all of the sample messages.

Test Results ⫘

When you press the Parse Message button, the test results will show in the abbreviated tables below the message. From here you'll see whether your parser is generating any results at all. Once those tables are populating, you can expand them to see the full results. The Parsed Source Message table shows how the message is broken down into segments by the splitting function. The Source Destination Mapping table shows how transformed source data is assigned to event schema fields.

The Extractor Path shows exactly which parsers were used to parse the message. It's possible to create a situation where a message could be parsed by more than one available parser, and this is where you'll see if an unexpected parser is picking up your sample message. For processing efficiency, the parsing engine randomizes the parsers it attempts to use until it finds a match, so it's possible to see different results each time the Parse Message button is pressed. Running the Parse Message function several times should show if this will be the case.

Pressing the Run Test button will test all sample messages for this Custom Parser, and will output the results similarly to an event search. It will also show what event schema the message is normalized to, as well as the Extractor Path.

Exit the Edit Screen ⫘

Click the Exit button to leave the Parser Edit screen and return to the Custom Parsers screen to perform additional actions. Unsaved changes are lost.

Enabling a Custom Parser ⫘

By default, a custom parser is disabled. This gives you time to edit and test it using the GUI tools in XDR before turning it on. Once you are confident that your custom parser is ready, return to the Custom Parsers screen to enable it.

Enabling your parser is non-destructive. Data coming into XDR continues to create the Generic events that are currently created, so there is no risk in enabling, other than those risks already outlined around parser naming collisions.

Once your parser is enabled, events are created immediately. You can verify this by waiting a few minutes for data to arrive and then searching for WHERE sensor_type=[your_sensor_type]. If data has been received by XDR, the search returns results that are normalized to the correct event types.

Edits to an enabled custom parser take effect immediately. Exercise caution when editing. The system returns an error if you attempt to save an invalid parser over an active parser. However, it’s possible to write a valid parser that puts the wrong information into the destination schema fields.

When enabling parent and child parsers, the parent must be enabled before any children.

Disabling a Custom Parser ⫘

Custom parsers can be disabled from the same interface. Once disabled, the parser stops producing events immediately. Generic events are still created as normal, but events are no longer sent to the additional schema specified in your disabled parser.

Deleting/Archiving Custom Parsers ⫘

Select the Trash icon in the Actions column of the Custom Parsers screen to delete the custom parser. This is a non-recoverable deletion.